I think there is another way of looking at this set of data, though.

I'm not convinced that the "skill level" has changed drastically between 2013's rubbish skill and 2014's budding meteorologists.

2014 happened to be a year with a very high cumulative anomaly but, importantly, almost all of it in the same direction. So (at least in my case) the only skill was thinking that this was a longish-term trend and going highish every month, which has worked like a charm this year.

A better way of assessing skill would be to match the average error against the STANDARD DEVIATION of the monthly anomalies (this is a measure of the degree of scatter of the anomalies about their average). 2014, with 10 or 11 anomalies in the same direction, has a high cumulative value but a low standard deviation. (This also handles adding + and - figures together OK.)

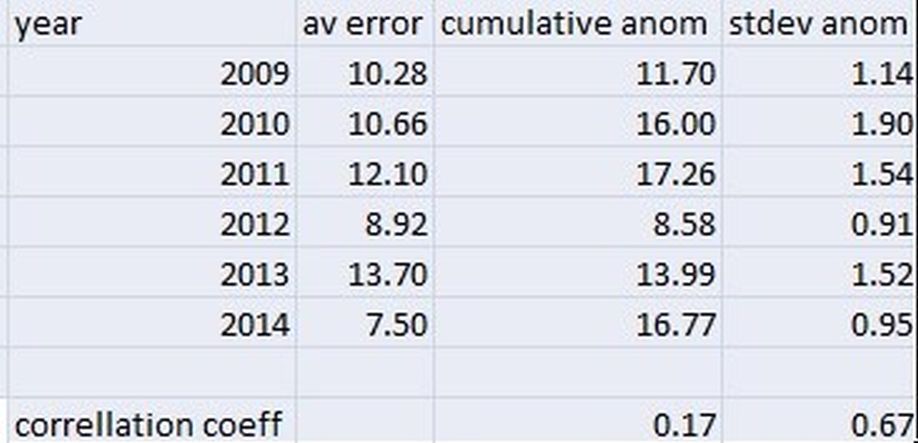
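To show the difference between the two measures, here is a rough Python sketch. The monthly figures are made up purely for illustration (they are not real CET anomalies), the names `one_sided_year`, `mixed_year` and `summarise` are my own, and I am assuming the cumulative anomaly is a plain signed sum and the standard deviation is the sample version (like a spreadsheet STDEV).

```python
import statistics

# Hypothetical monthly anomalies in degrees C, invented for illustration only.
# one_sided_year: every month on the warm side, but all fairly similar.
# mixed_year: months swinging between warm and cold.
one_sided_year = [1.2, 0.8, 1.5, 1.0, 0.9, 1.3, 1.1, 0.7, 1.4, 1.0, 0.6, 1.2]
mixed_year = [2.5, -2.0, 1.8, -1.5, 0.5, -2.2, 1.9, -0.8, 2.1, -1.7, 0.3, -1.9]


def summarise(anomalies):
    """Return (cumulative anomaly, sample standard deviation) for one year."""
    cumulative = sum(anomalies)             # assumption: signed sum of the months
    scatter = statistics.stdev(anomalies)   # sample standard deviation
    return cumulative, scatter


one_sided_cumulative, one_sided_stdev = summarise(one_sided_year)
mixed_cumulative, mixed_stdev = summarise(mixed_year)

print(f"one-sided year: cumulative={one_sided_cumulative:.1f}, stdev={one_sided_stdev:.2f}")
print(f"mixed year:     cumulative={mixed_cumulative:.1f}, stdev={mixed_stdev:.2f}")
```

With numbers like these, the one-sided year has the bigger cumulative anomaly but the smaller standard deviation, which is the 2014 situation described above.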

If you look at that way of doing it, you find a reasonable fit between the average error and the standard deviation of the anomaly, and a much better one than the fit between error and cumulative anomaly. The table below shows the correlation coefficient (how good a fit there is, 0 = no fit and 1 = perfect fit) of the average error against each of these two measures.

Incidentally, if 2010 is removed to get rid of the horrendous errors from Dec 2010 (CET anomaly of -5.8C vs 1971-2000), then the correlation coefficient for the STDEV goes up to 0.94.
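For anyone who wants to try the same check, here is a minimal Python sketch of the correlation calculation, including the effect of dropping one awkward year. The per-year numbers and the labels "year A" to "year E" are placeholders I have invented, not the real competition figures, and `pearson` is just my own helper for the usual Pearson correlation coefficient.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: (stdev of monthly anomalies, average prediction error).
# "year E" plays the part of a year distorted by one extreme month.
years = {
    "year A": (1.0, 1.1),
    "year B": (1.4, 1.5),
    "year C": (1.8, 1.7),
    "year D": (2.2, 2.3),
    "year E": (2.6, 1.6),
}

stdevs = [v[0] for v in years.values()]
errors = [v[1] for v in years.values()]
r_all = pearson(stdevs, errors)

# Same calculation with the awkward year left out.
kept = {name: v for name, v in years.items() if name != "year E"}
r_without = pearson([v[0] for v in kept.values()], [v[1] for v in kept.values()])

print(f"all years:       r = {r_all:.2f}")
print(f"without outlier: r = {r_without:.2f}")
```

With only a handful of years, one point can move the coefficient a long way, so a figure like this is best treated as a rough guide rather than proof.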

So as a rule of thumb, based on the standard deviation of the anomalies, 2012 and 2014 have been quite easy years and 2010, 2011 and 2013 difficult ones, and the difficult years have the higher error rates.

Originally Posted by: lanky